Hello, I am Jaejin Song, DevOps Team Leader at WhaTap Labs. The DevOps team does a wide range of work, from managing WhaTap service infrastructure to CI/CD, issue handling, customer support, and technical writing. You can say that we do everything except service development.

WhaTap monitoring service collects a lot of data as it monitors tens of thousands of servers around the world and more than 1 million transactions per second in real time. The amount of data stored on the collection server disk is about 6Tb/day, and the amount is increasing every day. We would like to talk about how we have been thinking about the data, which is the core of WhaTap service, from the infrastructure perspective, not from the code perspective, to efficiently store this data.😄

Minimum storage unit project

A unit of monitoring for the WhaTap service is called a "project." A project can be a group of applications, a group of servers, or a single NameSapce, and in Kubernetes, a single NameSapce is the smallest unit. Most people organize a few servers and a few applications into a project.

Data storage is also based on projects.

With a combined storage of 6 TB/day and a total of 10,000 projects, 600 Mb/day per project is the average. When a new project is created, it is placed on the most spare VM out of the dozens of storage tens. The volumes allocated to the VM are managed by a monitoring daemon, which calls the cloud provider's API to increase the volume size when it anticipates running out of space.

Suddenly a big client

We were having a peaceful time, with services thriving and systems running smoothly with the power of automation. Then one day, we noticed a red flag in one of our large client’s Kubernetes monitoring projects: hundreds of pods were being created on a single NameSapce, and within a day, we started to accumulate over 1Tb of data.

While it is natural to scale out as the number of projects grows, it is unexpected to see so much data stored in a single project.

WhaTap monitoring service should guarantee 1 month data retention. You have homework, because you will reach the volume size limit in just a few days.

First Aid - LVM

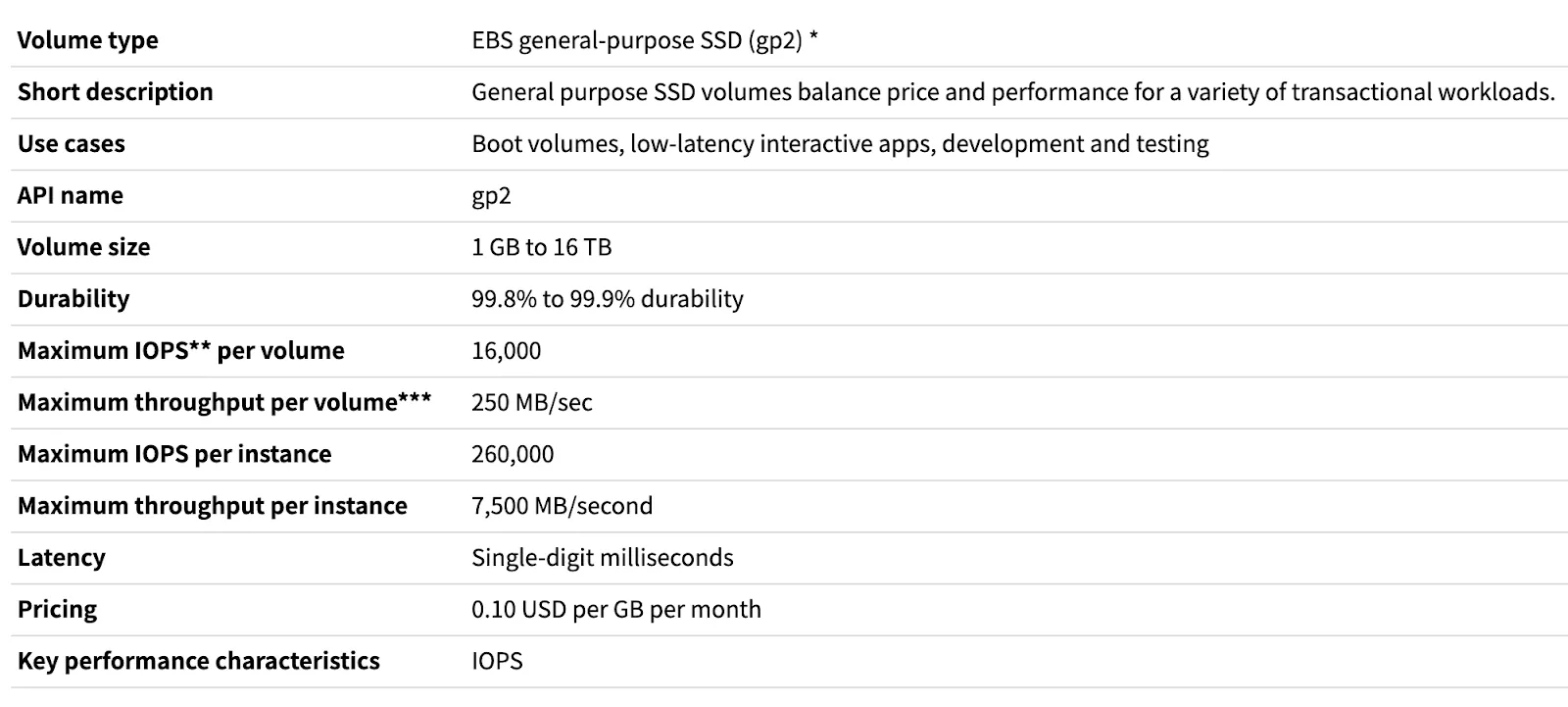

After watching the usage trend for a few days, it was clear that the volume size was going to be over 16Tb. We moved the project to a new VM, and the volume on the new VM was configured with LVM to tie the two volumes together.

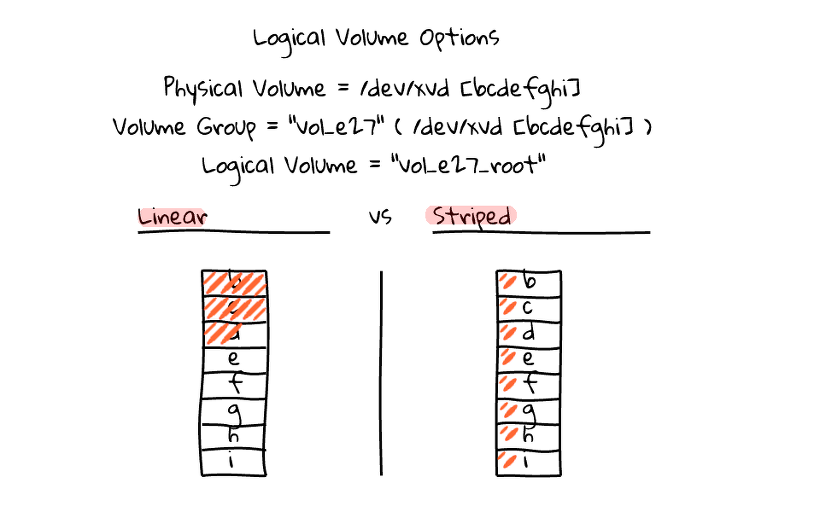

However, when it came time for the customer to eagerly retrieve the accumulated data, performance issues arose. LVM by itself doesn't cause performance degradation, but when configured as Linear with scaling in mind, only one of the two disks was being written too hard.

Linear vs Stripe

After LVM

The I/O performance degradation made me think about Stripe on LVM. Stripe on LVM is raid0, which has the same drawbacks: each volume must be the same size and cannot be resized later. This configuration greatly reduces the flexibility of LVM. While I am not worried about disk failures in a cloud environment, it is not uncommon for Stripe information to be lost due to configuration changes, so I was concerned. The nature of the storage is such that it uses thousands of IOPS at all times with random I/O and requires instant latency, so distributed file systems like HDFS and Object Storage are out of the question.

Late that night, I fell asleep thinking "Wouldn't it be great if we could configure Stripe like a linear write in LVM?".

The King of Filesystems - ZFS

On my way to work the next day, Google answered my wish: "ZFS, BTRFS, choose one". I had heard of both, but had not given them much attention or thought to try them out.

The introduction on the ZFS homepage was tremendous.

- Filesystem Native Raid Features

- Copy-On-Write transactions

- Data compression features

- Data deduplication capabilities

- Continuous data integrity checks and automatic recovery

- Read / Write Cache

- Maximum size 16EB

It is a filesystem panacea, and when I dug into the details, I thought, "Is that all there is to it?" and it looks like it is.

I decided to see if it was true.

I decided to see if it was true.

We mirrored some of the service data and created a harsh environment to accommodate a project with more than twice the production storage.

This is what our environment looks like, named "Canaria".

- AWS - m5.xlarge, gp2 2Tb, ubuntu linux 18.04

- 70% average CPU usage

- 12000 concurrent open files

- Average 5000 IOPS

- Write - 90MBps

First - btrfs vs zfs What to choose?

In a Linux environment, btrfs has many advantages for management and monitoring, but after a brief pilot, we concluded that zfs is better suited for our service.

In terms of future potential, BTRFS is the clear winner, but for now, it leaves something to be desired. In the future, btrfs will support zstd dictionary, which is more than 5 times more compressed than regular zstd, and lz4, which is the fastest. If all goes according to plan, we can replace zfs with btrfs in 2-3 years.

btrfs.wiki.kernel.org/index.php

And the support for lz4 compression is huge. lz4 in zfs has a "huge" difference in random I/O speed compared to lzo and zstd in brfs. However, if you do not need instant latency like we do, btrfs + zstd is a great choice.

gzip vs lzo vs lz4 vs zstd

Algorithms

Compression Ratio

Compression Performance

Decompression performance

gzip

2.743x

90 MB/s

400 MB/s

lzo

2.106x

690 MB/s

820 MB/s

lz4

2.101x

740 MB/s

4530 MB/s

zstd

2.884x

500 MB/s

1660 MB/s

Source: https://facebook.github.io/zstd/

Second - Does ZFS perform well compared to ext4?

In a typical environment, it is hard to notice a performance difference based on filesystem characteristics, but our repository is extremely I/O intensive.

Our storage is characterized by

- With tens of thousands of files open simultaneously, each file saves in irregular increments of a few Kb.

- Constant lookups occur during or simultaneously with saves.

Here are the results of our tests in the Canary environment

- When configured with one large volume (AWS GP2 5Tb - 250Mb / 16000 IOPS), it is much slower than ext4. This seems to be caused by copy on write (CoW) rather than journaling.

- When 4Tb was chopped into 500Gb * 8, the performance was tremendous. We believe that the Latency value is more closely related to the perceived performance than the IOPS.

- I/O performance is better when compression options are applied. The most significant performance improvement is seen when lz4 is selected as the compression algorithm.

Surprising Canary Test Results

I was very surprised by the results. There is "never" and "never" a reason not to use zfs. I was especially encouraged by the I/O performance gains with volume compression.

I was looking forward to the following benefits of switching from ext4 to zfs.

Easier volume management

- The overall volume size is reduced by a third, making it relatively easy to manage.

- Logical Volume Manager can be used at the filesystem level.

- Filesystems can be shrunk in the online mount state.

Performance

- Applying stripe can improve performance linearly with the number of disks.

- Compared to EXT4 when applying LZ4 compression

cost reduction compared to ext4

- By applying compression, the capacity is reduced by a third, so the cost of the volume is also reduced by a third.

There are some downsides, but the upsides outweigh them all. The slight increase in memory and CPU usage is a trade-off, but the performance and cost savings are so great that it's a trade-off worth making 10 times over.

Cons

- Slightly higher CPU usage when applying compression. Increase by +10

- Memory usage increases slightly.

- There is a learning curve due to the number of features and options.

Production Deployment

Since the "canary" environment showed great results, we immediately started the production deployment. The filesystem migration, which totaled over 150TB, was done sequentially over three months and was a smooth process, but there were a few missteps along the way that we will summarize.

Trial and error notes

1. Which ZFS version to choose?

There are many different versions of ZFS and many different ways to deliver modules. I have found that modules distributed with OpenZFS version 2.0x + dkms have better performance/reliability than the ZFS shipped with the distribution. Don't hesitate, use this version.

You must! Use version 2.0.x. The difference is very significant.

#On Ubuntu sudo add-apt-repository ppa:jonathonf/zfs sudo apt-get install -y zfs-dkms

2. Adjust options to suit your application.

The default values are fine, but some option tweaks can significantly increase performance. However, there is no one-size-fits-all solution, and you need to make appropriate adjustments based on the characteristics of your application, such as sequential vs. random.

For example, a large recordsize can improve compression and sequential storage performance, but at the expense of Random performance.

The guide is pretty good.

https://openzfs.github.io/openzfs-docs/Performance and Tuning/Workload Tuning.html

#Options applied to the WhaTap ingestion server

sudo zpool set ashift=12 yardbase sudo zfs set compression=lz4 yardbase sudo zfs set atime=off yardbase sudo zfs set sync=disabled yardbase sudo zfs set dnodesize=auto yardbase sudo zfs set redundant_metadata=most yardbase sudo zfs set xattr=sa yardbase sudo zfs set recordsize=128k yardbase

Trying something more drastic

We tried lowering the disk specs until it was working well in production.

The AWS region changed gp2 to gp3, which has slightly lower latency and half the bandwidth, but costs nearly 20% less than gp2. The performance difference is taken from the blog below and is not much different from what we have measured on ext4.

https://silashansen.medium.com/looking-into-the-new-ebs-gp3-volumes-8eaaa8aff33e

Azure regions have changed from premium SSDs to standard SSDs.

Standard SSDs in Azure have very low performance, actually only slightly better than HDDs.

Wow! That is enough to make a difference.

I did not expect it to work so well, especially with standard SSDs in Azure.

Since ZFS's raidz stripe scales performance linearly with the number of disks, the lower spec disks were no problem at all.

.svg)

.svg)